Sensor Fusion in Action

Ever wonder how humanoid robots manage to balance, walk, and interact with their surroundings? The answer lies in sensor fusion. But what exactly is it, and why is it so crucial for humanoid robots?

By Kevin Lee

Humanoid robots are no longer just a sci-fi dream. They're real, and they're getting better at mimicking human movement every day. But behind their smooth motions and uncanny balance lies a complex web of sensors working together in harmony. This is where sensor fusion comes into play. It's the secret sauce that allows humanoid robots to process data from multiple sensors and make sense of their environment.

In this article, we'll dive into how sensor fusion works, why it's essential for humanoid robot design, and how it helps these robots achieve more lifelike motion. Whether you're a tech enthusiast, a robotics pro, or just curious about the future of humanoids, this one's for you.

What is Sensor Fusion?

Let's start with the basics. Sensor fusion is the process of combining data from multiple sensors to get a more accurate and reliable picture of the robot's own state and its environment than any single sensor could provide. Think of it like this: if you were walking down the street blindfolded, you'd have to rely on your other senses, such as hearing, touch, and maybe even smell, to navigate. But if you could see, hear, and feel at the same time, you'd have a much clearer picture of your surroundings. That's what sensor fusion does for robots.

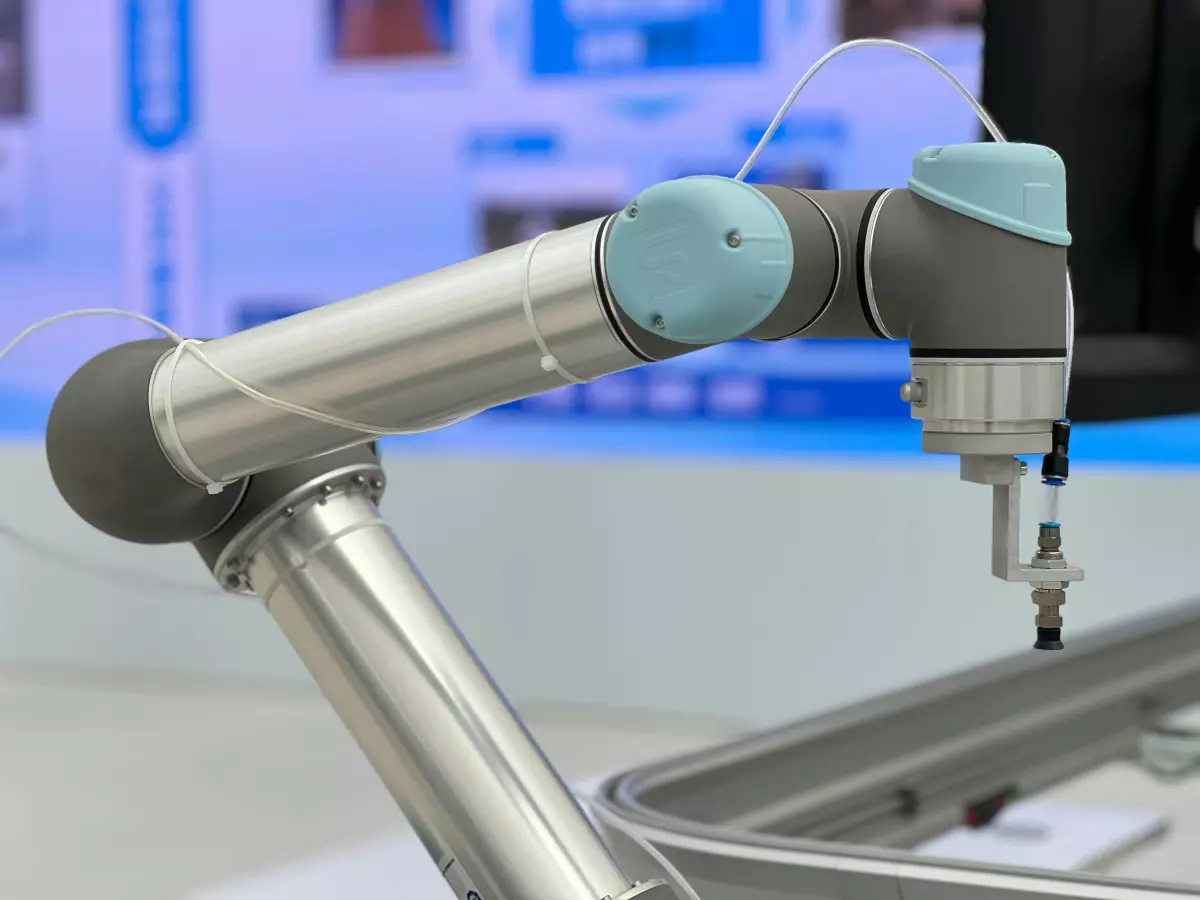

Humanoid robots are equipped with a variety of sensors, including cameras, gyroscopes, accelerometers, and pressure sensors, and each provides a different type of data. Cameras capture visual information; gyroscopes measure how fast the body is rotating (angular velocity), from which orientation can be tracked; accelerometers measure linear acceleration, including the constant pull of gravity, which tells the robot which way is down; and pressure or force sensors, often placed in the feet and hands, measure contact forces. Each sensor is useful on its own, but when their data is combined through sensor fusion, the robot can make better-informed decisions about how to move and interact with its environment.
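To make the idea concrete, here is a minimal Python sketch of the simplest form of fusion: averaging two measurements of the same quantity, weighted by how noisy each one is (an inverse-variance weighted average). The tilt readings and noise variances are hypothetical, and the sketch assumes both sensors are unbiased with independent noise of known variance. Notice that the fused variance comes out smaller than either input, which is exactly the "clearer picture" fusion promises.

```python
def fuse(reading_a: float, var_a: float,
         reading_b: float, var_b: float) -> tuple[float, float]:
    """Inverse-variance weighted average of two independent, unbiased readings.

    Returns the fused estimate and its variance.
    """
    weight_a = 1.0 / var_a  # less noise -> larger weight
    weight_b = 1.0 / var_b
    fused = (weight_a * reading_a + weight_b * reading_b) / (weight_a + weight_b)
    fused_var = 1.0 / (weight_a + weight_b)  # always smaller than var_a and var_b
    return fused, fused_var


if __name__ == "__main__":
    # Hypothetical forward-tilt estimates, in degrees:
    # accelerometer-based 4.0 (variance 4.0), camera-based 3.0 (variance 1.0).
    tilt, tilt_var = fuse(4.0, 4.0, 3.0, 1.0)
    print(f"fused tilt: {tilt:.1f} deg, variance {tilt_var:.1f}")
```

With these numbers the fused tilt is 3.2 degrees with a variance of 0.8: pulled toward the less noisy camera estimate, and more certain than either sensor alone.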

Why is Sensor Fusion Crucial for Humanoid Robots?

Humanoid robots are designed to move and interact with the world in ways that mimic humans. But unlike us, they don't have a brain that automatically processes sensory information. Instead, they rely on algorithms to interpret data from their sensors. Without sensor fusion, a robot might struggle to balance or misinterpret its surroundings, leading to clunky, unnatural movements.

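For example, when a humanoid robot walks, it has to constantly adjust its balance to avoid falling over. This requires real-time data from multiple sensors, like accelerometers and gyroscopes, to estimate its orientation and how it is moving. Neither sensor is reliable on its own: adding up a gyroscope's rotation-rate readings gives a smooth orientation estimate that slowly drifts, while an accelerometer's sense of gravity gives a drift-free tilt estimate that gets jolted by every footstep. Sensor fusion algorithms combine the two, keeping the strengths of each, so the robot can adjust its posture and maintain balance. Without this, the robot would be as wobbly as a toddler taking its first steps.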
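One common way to fuse a gyroscope with an accelerometer is a complementary filter, among the simplest fusion techniques (a Kalman filter is a widely used, more rigorous alternative). The Python sketch below is illustrative rather than how any particular robot does it: the axis convention, the 100 Hz update rate, the blending factor `alpha = 0.98`, and the gyro bias are all assumed values, and it tracks only forward tilt (pitch).

```python
import math


def accel_pitch(ax: float, az: float) -> float:
    """Pitch (radians) implied by gravity alone.

    Assumes an axis convention where, at rest, a forward lean of theta gives
    ax = g*sin(theta) and az = g*cos(theta). Only valid when the body is not
    accelerating much, which is why it is noisy during walking.
    """
    return math.atan2(ax, az)


def complementary_filter(pitch: float, gyro_rate: float, ax: float, az: float,
                         dt: float, alpha: float = 0.98) -> float:
    """One update: trust the integrated gyro short-term, the accelerometer long-term."""
    gyro_pitch = pitch + gyro_rate * dt  # integrate angular velocity (drifts)
    return alpha * gyro_pitch + (1.0 - alpha) * accel_pitch(ax, az)


def simulate(true_pitch: float = 0.1, gyro_bias: float = 0.01,
             dt: float = 0.01, steps: int = 1000) -> tuple[float, float]:
    """Robot standing still at a fixed lean; the gyro reads only its (hypothetical) bias.

    Returns (gyro-only estimate, fused estimate) after `steps` updates.
    """
    g = 9.81
    ax, az = g * math.sin(true_pitch), g * math.cos(true_pitch)
    gyro_only = fused = true_pitch  # both start from the correct tilt
    for _ in range(steps):
        gyro_only += gyro_bias * dt  # the true rotation rate is zero
        fused = complementary_filter(fused, gyro_bias, ax, az, dt)
    return gyro_only, fused


if __name__ == "__main__":
    gyro_only, fused = simulate()
    print(f"gyro only: {gyro_only:.3f} rad, fused: {fused:.3f} rad, true: 0.100 rad")
```

After ten simulated seconds, the gyro-only estimate has drifted by about 0.1 rad (roughly 6 degrees), while the fused estimate stays within about 0.005 rad of the true lean. Raising `alpha` smooths out footstep jolts but lets gyro drift linger longer; lowering it does the reverse.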

How Sensor Fusion Enhances Motion Control

Motion control is one of the most challenging aspects of humanoid robot design. Unlike wheeled robots, humanoids need to walk, run, jump, and even climb stairs. This requires precise control over their limbs and joints, as well as the ability to adapt to changing environments.

Sensor fusion plays a key role in motion control by giving the robot a comprehensive picture of both its own state and its surroundings. For instance, when a humanoid robot encounters an obstacle, its cameras might detect the object and estimate its size and distance, while pressure sensors in its feet report how its weight is distributed and whether each foot is firmly on the ground. The robot's motion control algorithms then use this fused data to plan the most efficient step that clears the obstacle without compromising stability.
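As a hedged illustration of that obstacle example, the sketch below combines a camera's obstacle-height estimate with readings from force sensors under the stance foot. It computes the foot's center of pressure (the force-weighted average position of the sensors), a standard balance indicator, and only approves the step if the foot is firmly loaded and the center of pressure is not near the edge of the sole. The `safe_to_step_over` rule, its thresholds, and the sensor layout are hypothetical, chosen only to show how two data sources can feed one decision.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class FootSensor:
    x: float      # sensor position along the sole, metres forward of the foot centre
    force: float  # measured normal force, newtons


def center_of_pressure(sensors: List[FootSensor]) -> Optional[float]:
    """Force-weighted average sensor position, or None if the foot carries no load."""
    total = sum(s.force for s in sensors)
    if total <= 0.0:
        return None
    return sum(s.x * s.force for s in sensors) / total


def safe_to_step_over(stance: List[FootSensor], obstacle_height: float,
                      foot_half_length: float = 0.10, edge_margin: float = 0.02,
                      min_load: float = 200.0, max_step_height: float = 0.15) -> bool:
    """Hypothetical rule fusing foot-pressure data with a camera height estimate."""
    cop = center_of_pressure(stance)
    if cop is None or sum(s.force for s in stance) < min_load:
        return False  # stance foot is not firmly on the ground
    if abs(cop) > foot_half_length - edge_margin:
        return False  # weight is near the toe or heel edge: balance is marginal
    return obstacle_height <= max_step_height  # camera: can the swing leg clear it?


if __name__ == "__main__":
    # Two heel sensors and two toe sensors (hypothetical layout and readings).
    stance = [FootSensor(-0.08, 150.0), FootSensor(-0.08, 150.0),
              FootSensor(0.08, 200.0), FootSensor(0.08, 200.0)]
    print(center_of_pressure(stance))       # roughly 0.011 m, near the middle of the foot
    print(safe_to_step_over(stance, 0.08))  # True: low obstacle
    print(safe_to_step_over(stance, 0.25))  # False: too tall to step over
```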

In addition to helping robots navigate obstacles, sensor fusion also improves their ability to interact with humans. For example, when a humanoid robot shakes hands with a person, its pressure sensors detect the force of the handshake, while its cameras analyze the person's facial expressions. The robot can then adjust its grip and respond appropriately, creating a more natural interaction.
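The grip-adjustment half of the handshake example can be sketched as a simple feedback loop. The Python below is a hypothetical illustration, not a description of any real robot hand: each cycle it nudges a normalised grip command up or down according to the error between measured and target force (an integral-style update), with a made-up 10 N target and a toy hand model whose force is proportional to the command. The camera and facial-expression side of the example is not modelled.

```python
def grip_step(command: float, measured_force: float, target_force: float = 10.0,
              gain: float = 0.2, max_command: float = 1.0) -> float:
    """One control cycle: adjust a normalised grip command (0 = open, 1 = full grip)
    in proportion to the force error, accumulating over cycles (integral-style)."""
    error = target_force - measured_force
    command += gain * error / target_force
    return min(max(command, 0.0), max_command)  # keep within actuator limits


def simulate_grip(stiffness: float = 20.0, steps: int = 50) -> float:
    """Toy hand model (an assumption): measured force = stiffness * command."""
    command = 0.0
    for _ in range(steps):
        command = grip_step(command, stiffness * command)
    return stiffness * command


if __name__ == "__main__":
    print(f"settled grip force: {simulate_grip():.2f} N")
```

A stiffer contact (for example `stiffness=40.0`) also settles at 10 N, just with a smaller command. For much stiffer contacts the gain would need retuning, since too large a gain makes the loop oscillate.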

Challenges in Sensor Fusion for Humanoids

While sensor fusion is a game-changer for humanoid robots, it's not without its challenges. One of the biggest hurdles is processing the massive amount of data generated by multiple sensors in real time. Humanoid robots need to make split-second decisions to maintain balance and avoid obstacles, which leaves only a tiny time budget for fusing the data on each update and demands fast, efficient processing.

Another challenge is ensuring that the data from different sensors is accurate and reliable. If one sensor provides faulty data, it can throw off the entire sensor fusion process, leading to errors in motion control. To address this, engineers rely on, and keep refining, estimation techniques such as Kalman filters, which weight each reading according to how much it can be trusted, filter out noise, and can flag readings that sharply disagree with what the robot expects.
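Here is a minimal Python sketch of that idea for a single, slowly varying quantity: a one-dimensional Kalman filter that weights each new reading by its uncertainty, plus a common safeguard called innovation gating, which discards any reading that lands implausibly far from the filter's prediction. The noise settings, the three-standard-deviation gate, and the readings are assumed values for illustration; a real robot would track many coupled quantities at once.

```python
import math


class GatedKalman1D:
    """Scalar Kalman filter for a roughly constant quantity, with outlier rejection."""

    def __init__(self, x0: float, p0: float, q: float, r: float, gate: float = 3.0):
        self.x = x0       # current estimate
        self.p = p0       # variance of the estimate
        self.q = q        # process noise variance added at each prediction
        self.r = r        # measurement noise variance (how much the sensor is trusted)
        self.gate = gate  # reject readings more than `gate` std devs from the prediction

    def update(self, z: float) -> bool:
        """Fold in reading z; return False if it was rejected as an outlier."""
        self.p += self.q                      # predict: value assumed constant, uncertainty grows
        innovation = z - self.x               # disagreement between reading and prediction
        s = self.p + self.r                   # expected variance of that disagreement
        if abs(innovation) > self.gate * math.sqrt(s):
            return False                      # implausible reading: keep the prediction
        k = self.p / s                        # Kalman gain: how far to move toward z
        self.x += k * innovation
        self.p *= 1.0 - k                     # estimate becomes more certain
        return True


if __name__ == "__main__":
    kf = GatedKalman1D(x0=1.0, p0=1.0, q=0.001, r=0.01)
    readings = [1.0, 1.1, 0.9, 25.0, 1.05]  # 25.0 simulates a faulty spike
    accepted = [kf.update(z) for z in readings]
    print(accepted, round(kf.x, 3))         # the spike at 25.0 is rejected
```

A tighter gate rejects more faulty readings but risks discarding genuine sudden changes, so the threshold is a trade-off tuned per sensor.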

The Future of Sensor Fusion in Humanoid Robots

As sensor technology continues to advance, we can expect even more impressive developments in humanoid robot design. New types of sensors, such as tactile sensors that mimic human skin, could provide robots with an even greater understanding of their environment. Meanwhile, improvements in sensor fusion algorithms will allow robots to process data faster and more accurately, resulting in smoother, more lifelike movements.

Ultimately, sensor fusion is the key to unlocking the full potential of humanoid robots. By combining data from multiple sensors, these robots can move, balance, and interact with the world in ways that were once thought impossible. And as the technology continues to evolve, the line between human and robot movement will only get blurrier.

So, the next time you see a humanoid robot walking down the street, remember: it's not just about the hardware. It's the magic of sensor fusion that's making it all possible.