Inside the Machine

Ever wondered how humanoid robots manage to move so smoothly and react to their environment the way people do? A big part of the answer is sensor integration. And if you're not paying attention to how these sensors are designed and placed, you're missing much of the real magic behind humanoid robotics.

By Jason Patel

Humanoid robots are designed to mimic human motion, but it’s not just about making them look like us. The real challenge lies in giving them the ability to sense and respond to their surroundings in real time. This is where sensor integration comes into play. Without a well-thought-out sensor system, your humanoid robot is basically a glorified mannequin. So, how do engineers pull this off? Let’s dive into the world of sensor integration in humanoid robots and explore the design choices that make these machines work.

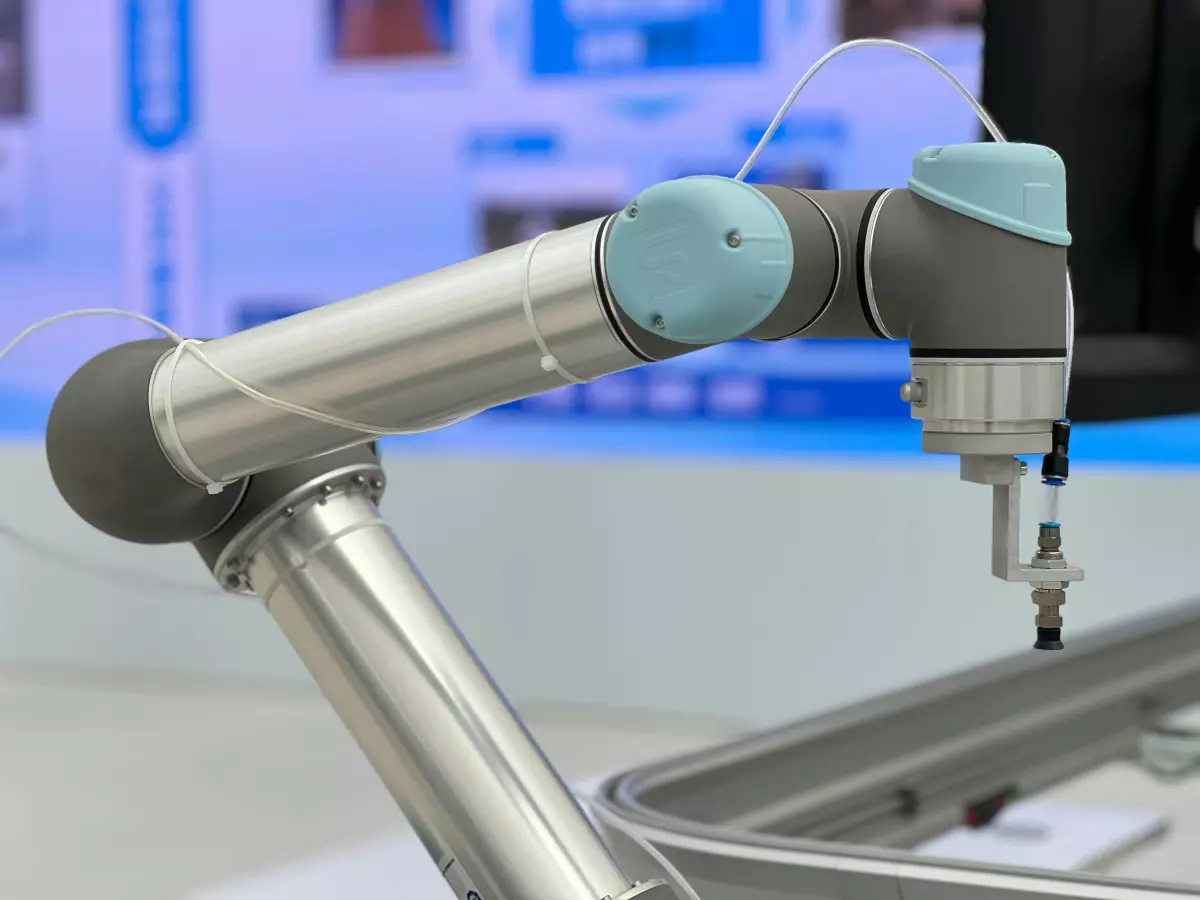

First off, let’s talk about the types of sensors used in humanoid robots. You’ve got your basic sensors like cameras for vision, microphones for hearing, and gyroscopes for balance. But that’s just scratching the surface. Advanced humanoid robots also use touch sensors, pressure sensors, and even temperature sensors to interact with their environment in a more human-like way. These sensors are strategically placed all over the robot's body to give it a full range of sensory input, much like our own nervous system.

But here’s the kicker: it’s not just about slapping a bunch of sensors onto a robot and calling it a day. The real challenge is in how these sensors communicate with each other and with the robot’s control algorithms. This is where sensor fusion comes into play. Sensor fusion is the process of combining data from multiple sensors to create a more accurate and reliable understanding of the robot’s state and environment. For example, a humanoid robot might use both its cameras and gyroscopes to estimate its position and orientation in space. A gyroscope tracks fast rotations well but drifts over time, while a camera gives a slower reading that doesn’t drift. By fusing these two data streams, the robot gets an estimate that is better than either sensor alone, and it can make more informed decisions about how to move and interact with objects.
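To make the camera-plus-gyroscope example concrete, here is a minimal sketch of one common fusion technique, a complementary filter. It is not how any particular robot does it. The function name, the `alpha` weighting, and the idea that the camera pipeline already outputs a tilt angle in degrees are all assumptions made for illustration.

```python
def complementary_filter(gyro_rates, camera_angles, dt, alpha=0.98, initial_angle=0.0):
    """Fuse gyro rates (deg/s) with camera-derived angles (deg) into one tilt estimate."""
    angle = initial_angle
    estimates = []
    for rate, camera_angle in zip(gyro_rates, camera_angles):
        # Short term: trust the gyro by integrating its rate over one time step.
        predicted = angle + rate * dt
        # Long term: nudge the prediction toward the camera's drift-free reading.
        angle = alpha * predicted + (1.0 - alpha) * camera_angle
        estimates.append(angle)
    return estimates


# Toy scenario (made-up numbers): the robot stands still,
# but the gyro reports a constant 0.5 deg/s bias.
dt = 0.01
gyro_rates = [0.5] * 1000
camera_angles = [0.0] * 1000

gyro_only = sum(rate * dt for rate in gyro_rates)  # naive integration drifts away
fused = complementary_filter(gyro_rates, camera_angles, dt)[-1]
print(f"gyro only: {gyro_only:.2f} deg, fused: {fused:.2f} deg")
```

In this toy run the gyro-only estimate keeps drifting while the fused estimate stays close to the camera's reading. Real systems often use more elaborate estimators, such as Kalman filters, but the underlying trade-off is the same.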

Now, let’s talk about sensor placement. You might think that placing sensors on a humanoid robot is as simple as copying human anatomy, but it’s actually much more complicated than that. For example, while humans have eyes on the front of their heads, some humanoid robots have cameras placed on their shoulders or even their chests. Why? Because these positions can offer a wider or less obstructed field of view, which is crucial for tasks like object recognition and navigation. Similarly, touch sensors are often placed on a robot’s hands and feet, but they can also be found on other parts of the body to give the robot a better sense of balance and spatial awareness.

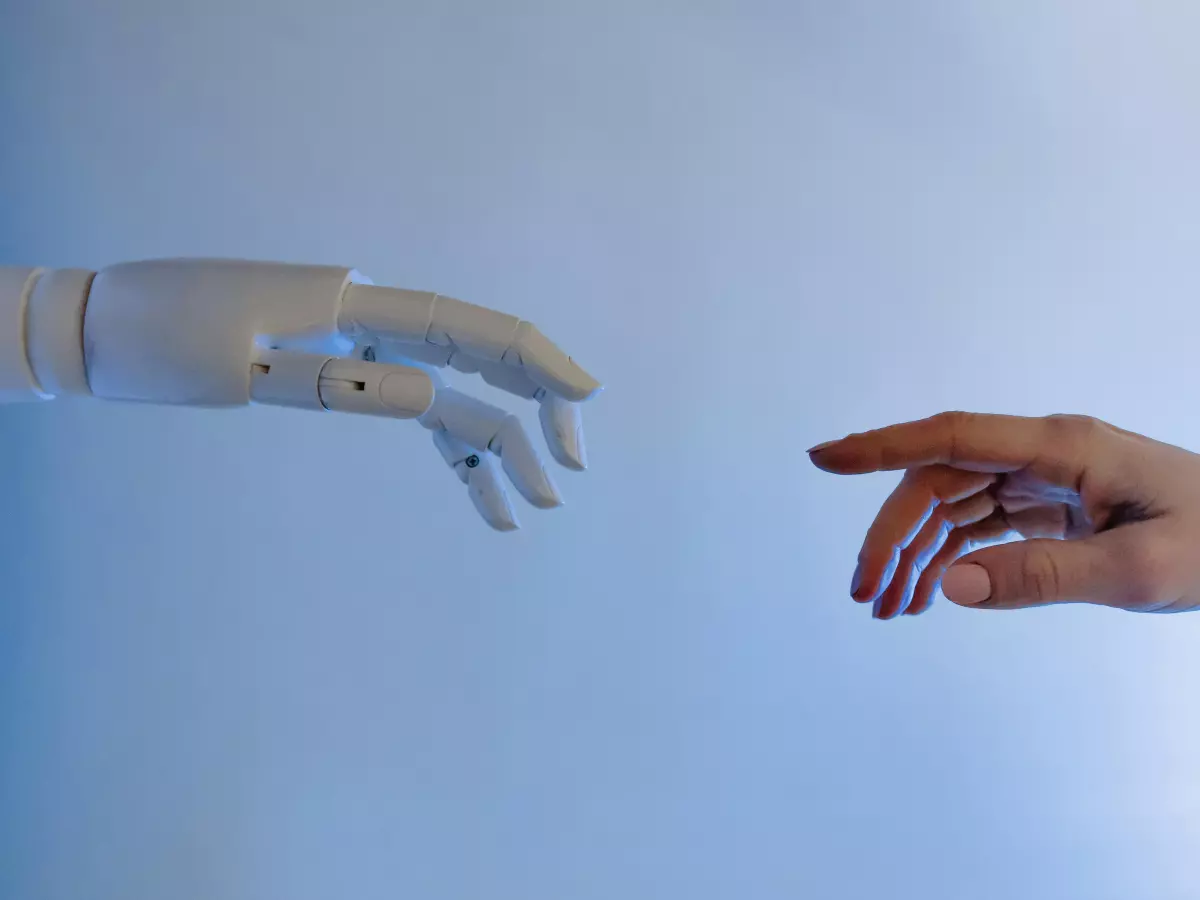

Another key aspect of sensor integration is the use of feedback loops. In a humanoid robot, sensors don’t just passively collect data; they actively influence the robot’s movements. For example, if a proximity sensor detects that the robot is about to bump into something, or a touch sensor registers unexpected contact, the control algorithm can adjust the robot’s movements in real time to avoid or soften the collision. Each adjustment changes what the sensors read next, which is what closes the loop. This kind of feedback is essential for creating robots that can operate safely and autonomously in dynamic environments.
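Here is a rough sketch of what such a feedback loop can look like in code. Everything in it is hypothetical: it assumes the robot has some distance reading to the nearest obstacle, and the speeds and thresholds are invented numbers, not values from a real platform.

```python
def adjust_speed(distance_m, cruise_speed=0.5, stop_distance=0.2, slow_distance=1.0):
    """Pick a walking speed (m/s) from the sensed distance to an obstacle (m)."""
    if distance_m <= stop_distance:
        return 0.0  # too close: stop
    if distance_m >= slow_distance:
        return cruise_speed  # path is clear: walk at full speed
    # In between: scale speed linearly with the remaining margin.
    fraction = (distance_m - stop_distance) / (slow_distance - stop_distance)
    return cruise_speed * fraction


def simulate_approach(start_distance_m, dt=0.1, steps=200):
    """Walk toward a wall; each step's motion changes the next sensor reading."""
    distance = start_distance_m
    for _ in range(steps):
        speed = adjust_speed(distance)  # sense, then decide
        distance -= speed * dt          # act, which feeds back into sensing
    return distance


final_distance = simulate_approach(2.0)
print(f"robot settles about {final_distance:.2f} m from the wall")
```

The point is the loop structure: the reading sets the speed, the speed changes the distance, and the new distance becomes the next reading. In this sketch the robot slows down smoothly and settles at the stop distance instead of walking into the wall.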

But sensor integration isn’t just about avoiding obstacles. It’s also about enabling more complex behaviors. For example, some humanoid robots are equipped with sensors that allow them to detect changes in temperature or pressure. Pressure sensing in the fingers, for instance, lets a robot grip a fragile object with just enough force to hold it without crushing it, and it helps the robot interact with humans in a more natural way. By integrating these sensors into the robot’s control system, engineers can create machines that are not only more capable but also more intuitive to interact with.
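The fragile-object case can be sketched in a few lines. This is an illustrative toy, not a real gripper API: `read_pressure` stands in for whatever call returns the fingertip pressure at a given grip force, and the target, step, and force limit are made-up values.

```python
def close_gripper(read_pressure, target_pressure=2.0, max_force=10.0, step=0.5):
    """Raise grip force in small steps until the sensed pressure reaches the target."""
    force = 0.0
    while force < max_force:
        if read_pressure(force) >= target_pressure:
            return force  # firm enough: stop squeezing
        force = min(force + step, max_force)
    return force  # gave up at the force limit


# Hypothetical sensor model: pressure rises linearly with the applied force.
def soft_object(force):
    return 0.5 * force


grip_force = close_gripper(soft_object)
print(f"stopped squeezing at force {grip_force}")
```

The design choice worth noticing is that the gripper never commands a fixed force. It keeps checking the sensor and stops as soon as the object pushes back hard enough, with `max_force` as a safety cap if the target is never reached.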

Of course, all of this sensor data has to be processed in real time, which brings us to the role of motion control algorithms. These algorithms are responsible for translating the raw data from the sensors into meaningful actions. For example, if a robot’s gyroscope detects that it’s losing balance, the motion control algorithm can adjust the robot’s posture to prevent it from falling over. Similarly, if the robot’s cameras detect an object in its path, the algorithm can plan a new route to avoid it. The more sophisticated the motion control algorithm, the more lifelike the robot’s movements can be.
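As a taste of the balance example, here is a minimal sketch using a proportional-derivative (PD) controller, one of the simplest control laws around. The gains and the unit-inertia toy model are assumptions chosen so the example runs. A real humanoid has to deal with gravity, many joints, and contact with the ground, so treat this as an illustration only.

```python
def pd_balance_torque(tilt_rad, tilt_rate_rad_s, kp=40.0, kd=8.0):
    """Corrective torque that opposes both the tilt and the speed of tilting."""
    return -(kp * tilt_rad + kd * tilt_rate_rad_s)


def simulate_recovery(initial_tilt_rad=0.2, dt=0.01, steps=500):
    """Toy model: unit inertia, so angular acceleration equals the applied torque."""
    tilt, rate = initial_tilt_rad, 0.0
    for _ in range(steps):
        torque = pd_balance_torque(tilt, rate)  # gyro-style readings in, command out
        rate += torque * dt                     # torque changes the tilt rate
        tilt += rate * dt                       # tilt rate changes the tilt
    return tilt


final_tilt = simulate_recovery()
print(f"tilt after 5 simulated seconds: {final_tilt:.6f} rad")
```

The proportional term pushes the body back toward upright, and the derivative term damps the motion so it doesn't overshoot and wobble. Tuning `kp` and `kd` is where much of the practical work goes.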

So, what’s the takeaway here? If you’re designing a humanoid robot, sensor integration should be at the top of your priority list. It’s not just about giving your robot the ability to see, hear, and touch. It’s about making sure all of these sensory inputs work together seamlessly. This requires careful planning, strategic sensor placement, and advanced motion control algorithms. But when done right, the result is a machine that can move, react, and interact with its environment in increasingly human-like ways.

Sensor integration is the unsung hero of humanoid robot design. Without it, even the most advanced robots would be little more than statues. But with the right sensors in the right places, and the right algorithms to process the data, humanoid robots can achieve far more lifelike behavior. So, the next time you see a humanoid robot in action, remember: it’s not just about the motors and the frame. It’s about the sensors and algorithms working behind the scenes to make it all possible.

And if you’re in the business of designing humanoid robots, don’t sleep on sensor integration. It’s the key to unlocking the full potential of these incredible machines.