Autonomy vs. Safety

Are we really ready to trust robots with full autonomy? The idea of robots making decisions on their own is exciting, but let's be honest—it's also a little terrifying. The more freedom we give them, the more we have to worry about safety. And here's the kicker: autonomy and safety often pull in opposite directions.

By Hiroshi Tanaka

Think about it. The more autonomous a robot becomes, the more it has to make decisions without human intervention. But with great autonomy comes great responsibility—especially when it comes to safety. So, how do engineers design robots that can make their own decisions while ensuring they don’t, you know, accidentally run over your dog or knock over a priceless vase?

Autonomy: The Dream

Autonomy in robotics is the holy grail. Imagine robots that can navigate complex environments, make real-time decisions, and adapt to new situations without any human input. That’s the dream, right? But autonomy isn't just about giving robots free rein. It’s about creating systems that allow robots to operate independently while still achieving specific goals.

To achieve this, robots need sophisticated control systems, advanced sensors, and powerful algorithms. These systems allow them to perceive their environment, process information, and make decisions. But here's the catch: the more complex the environment, the harder it is for robots to make safe decisions. And that’s where safety comes into play.

Safety: The Non-Negotiable

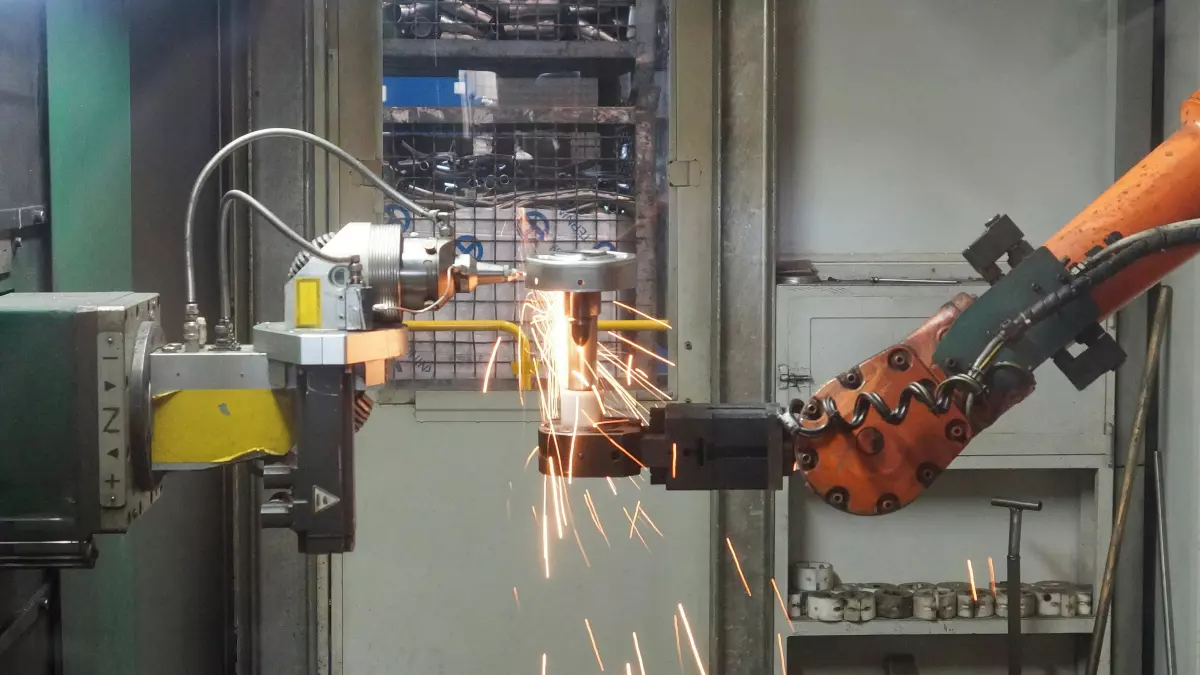

Safety is non-negotiable. No matter how autonomous a robot is, if it can’t operate safely, it’s a no-go. Safety in robotics is all about minimizing risk, both to humans and to the robot itself. This means that robots need to be equipped with fail-safes, redundancies, and emergency stop mechanisms. But it’s not just about physical safety. Cybersecurity is also a huge concern. After all, what good is a robot that can navigate a warehouse if a hacker can take control of it?

One of the biggest challenges in designing safe robots is creating systems that can predict and avoid dangerous situations. This requires a combination of real-time data processing, machine learning, and, in some cases, human oversight. But balancing autonomy and safety is no easy task. The more autonomous a robot is, the harder it is to predict how it will behave in every possible situation.

Finding the Sweet Spot

So, how do we find the sweet spot between autonomy and safety? One approach is to limit the robot’s autonomy in certain situations. For example, autonomous cars often have “human-in-the-loop” systems that allow a human driver to take control in case of an emergency. Similarly, many industrial robots are designed to operate autonomously in controlled environments but require human supervision in more complex or unpredictable settings.
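To make the idea of limited autonomy a bit more concrete, here is a minimal Python sketch of how a takeover rule might be written. Everything in it is an assumption made for illustration: the `Command` type, the `arbitrate` function, and the rule that a human command always wins are hypothetical, not taken from any real vehicle or robot stack.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Command:
    """A simplified drive command (hypothetical type for this sketch)."""
    speed: float     # metres per second
    steering: float  # radians, positive means left


def arbitrate(autonomous: Command, human: Optional[Command], emergency: bool) -> Command:
    """Decide which command is actually sent to the actuators.

    Assumed priority order for this sketch:
    1. If a human has taken control, the human command wins.
    2. Otherwise, if an emergency is flagged, bring the robot to a stop.
    3. Otherwise, follow the autonomous planner.
    """
    if human is not None:
        return human
    if emergency:
        # Nobody has taken over, so fall back to stopping.
        return Command(speed=0.0, steering=autonomous.steering)
    return autonomous


planner = Command(speed=5.0, steering=0.1)
print(arbitrate(planner, None, emergency=False))                 # planner stays in charge
print(arbitrate(planner, Command(1.0, 0.0), emergency=False))    # human takes over
print(arbitrate(planner, None, emergency=True))                  # no human input: stop
```

The point of the sketch is the ordering, not the numbers: autonomy is the default, but it sits at the bottom of the priority list.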

Another approach is to build robots with multiple layers of safety. This can include everything from hardware-level safeguards (like proximity sensors that stop the robot from moving if it gets too close to a human) to software-based safety systems (like algorithms that predict and avoid dangerous situations). The idea is that if one layer misses a hazard, another can still catch it.
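Here is a rough Python sketch of what two such layers could look like when stacked. The distance thresholds, the function names, and the linear slow-down rule are all assumptions chosen for illustration; a real robot would take these values from its risk assessment and the applicable safety standards.

```python
STOP_DISTANCE_M = 0.5  # assumed threshold: hard stop inside this range
SLOW_DISTANCE_M = 2.0  # assumed threshold: start slowing inside this range


def speed_scaling_layer(speed: float, distance_m: float) -> float:
    """Software layer: reduce speed linearly as a person gets closer."""
    if distance_m >= SLOW_DISTANCE_M:
        return speed
    fraction = (distance_m - STOP_DISTANCE_M) / (SLOW_DISTANCE_M - STOP_DISTANCE_M)
    return speed * max(0.0, fraction)


def hard_stop_layer(speed: float, distance_m: float) -> float:
    """Last-resort layer: force zero speed when a person is too close."""
    return 0.0 if distance_m < STOP_DISTANCE_M else speed


def safe_speed(requested_speed: float, distance_m: float) -> float:
    """Pass the requested speed through both layers in turn."""
    speed = speed_scaling_layer(requested_speed, distance_m)
    # The hard stop runs last, so it still applies if the layer above is wrong.
    return hard_stop_layer(speed, distance_m)


for distance in (3.0, 1.25, 0.3):
    print(distance, safe_speed(1.0, distance))
```

Each layer is deliberately simple and independent. The hard stop does not rely on the speed-scaling logic being right, which is the whole reason for having more than one layer.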

The Future of Safe Autonomy

As robots become more autonomous, the need for robust safety systems will only increase. Engineers are already working on new technologies that will allow robots to operate safely in even the most complex environments. These range from advanced machine learning algorithms that help robots predict and avoid dangerous situations to new types of sensors that can detect potential hazards in real time.

In the end, the key to balancing autonomy and safety in robotics is finding the right mix of technology and human oversight. And while we may not be there yet, one thing is clear: the future of robotics will be both autonomous and safe—or it won’t be at all.