Beyond Sensors

If you think sensors are the magic ingredient that makes robots smart, you're missing the bigger picture. While sensors are crucial, they’re far from the whole story.

By Alex Rivera

Picture this: You’re driving down a foggy road, visibility is near zero, and your car’s sensors are going haywire. The radar is pinging, the cameras are useless, and the LiDAR is struggling to make sense of the environment. But you, the human driver, still manage to navigate through the fog, relying on your instincts, experience, and a bit of intuition. Now, imagine a robot in the same situation. Would it be able to do the same? Probably not.

That’s because, unlike humans, robots rely heavily on sensors to perceive the world. But here’s the kicker: Sensors alone aren’t enough. Just like you need more than your eyes to drive through fog, robots need more than just sensors to achieve true autonomy. They need something much more sophisticated: a brain that can process, adapt, and make decisions in real time, even when the data is incomplete or unreliable.

Why Sensors Aren’t Enough

Let’s start with the basics. Sensors are the eyes and ears of a robot. They gather data from the environment—whether it’s visual information from cameras, distance measurements from LiDAR, or tactile feedback from touch sensors. But here’s the thing: Sensors are only as good as the data they collect. And in the real world, data is messy.

Think about it. Sensors can be blocked or damaged, or they can misread their surroundings. A camera might be blinded by the sun, a LiDAR sensor might struggle in heavy rain, and a microphone might pick up background noise instead of the sound it’s supposed to detect. In these cases, the robot is essentially flying blind. And that’s where the real problem lies.

Without a brain capable of interpreting and compensating for incomplete or faulty data, the robot is stuck. It can’t make decisions, it can’t adapt, and it certainly can’t achieve true autonomy. In other words, sensors alone won’t cut it.

The Role of the Robot Brain

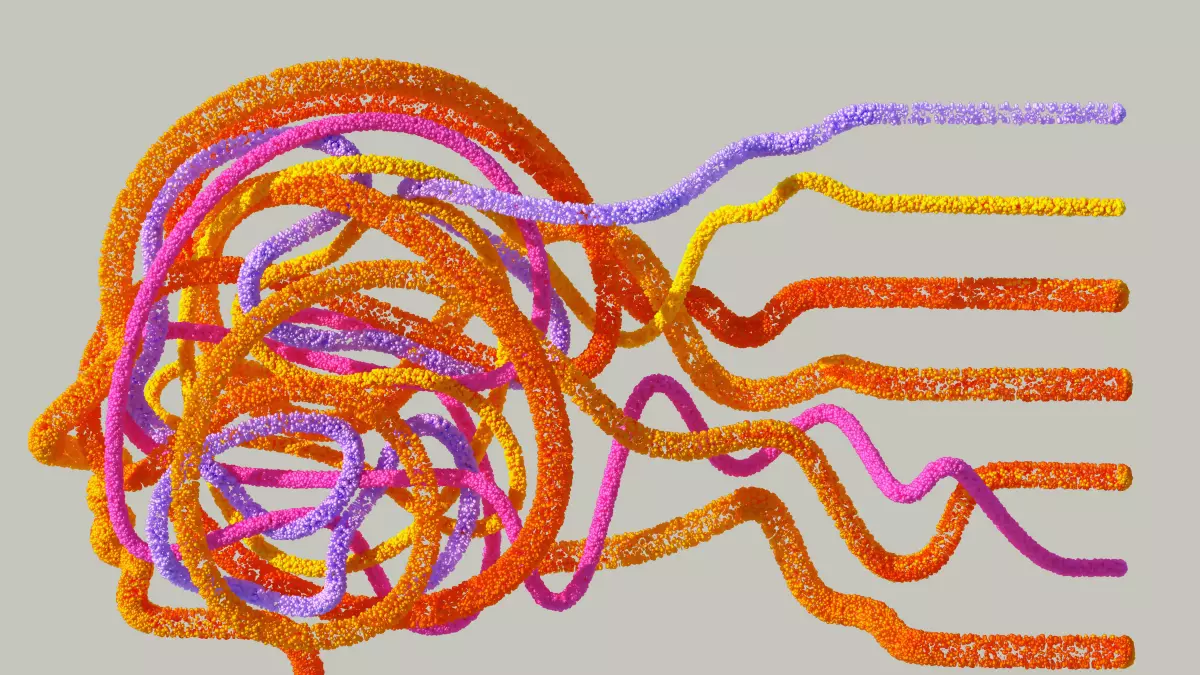

So, if sensors aren’t enough, what’s the missing piece? The answer lies in the robot’s brain—specifically, its control systems and algorithms. These are the systems that take raw sensor data and turn it into actionable information. They allow the robot to make sense of its environment, even when the data is noisy or incomplete.

For example, let’s go back to the foggy road scenario. A human driver can rely on experience and intuition to navigate through the fog. A robot, on the other hand, needs a brain that can process multiple streams of data, filter out the noise, and make educated guesses about what’s happening in the environment. This requires advanced algorithms, machine learning models, and real-time decision-making capabilities.

In technical terms, the first part of this job is known as sensor fusion. It’s the process of combining data from multiple sensors to create a more accurate and reliable picture of the environment than any single sensor could provide. But sensor fusion alone isn’t enough. The robot also needs predictive models that can anticipate what might happen next, as well as control systems that can adjust the robot’s behavior in real time.
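To make the idea of sensor fusion a little more concrete, here is a minimal sketch of one common textbook approach: an inverse-variance weighted average, where each sensor's reading counts for more the less noisy it is. The sensor names, distance readings, and variance values are made up for illustration, and a real system would likely use something more elaborate (a Kalman filter, for instance).

```python
def fuse(measurements):
    """Fuse (value, variance) pairs with an inverse-variance weighted average.

    Readings with a smaller variance (less noise) get a larger weight.
    Returns the fused value and the variance of the fused estimate.
    """
    weights = [1.0 / variance for _, variance in measurements]
    total_weight = sum(weights)
    fused_value = sum(w * value for w, (value, _) in zip(weights, measurements)) / total_weight
    fused_variance = 1.0 / total_weight
    return fused_value, fused_variance


# Hypothetical distance-to-obstacle readings in meters, as (value, variance).
# In fog, we assume the LiDAR is much noisier than the radar.
lidar_reading = (10.4, 4.0)
radar_reading = (10.0, 0.25)

fused_value, fused_variance = fuse([lidar_reading, radar_reading])
print(f"fused distance: {fused_value:.2f} m, variance: {fused_variance:.3f}")
```

Notice that the fused estimate lands close to the radar's reading, because the radar is the more trustworthy sensor here, and that its variance is lower than either sensor's on its own. That is the whole point of fusing: two imperfect readings can add up to one better one.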

From Perception to Action

But here’s where it gets even more interesting. Once the robot has processed the sensor data and made sense of its environment, it still needs to decide what to do next. This is where the control systems come into play. These systems are responsible for turning perception into action.

For example, let’s say a robot is navigating through a cluttered room. Its sensors detect a chair in its path, and its brain processes this information to determine that the chair is an obstacle. But now what? The robot needs to decide whether to go around the chair, move it, or stop altogether. This decision-making process is driven by the robot’s control systems, which take into account not only the sensor data but also the robot’s goals, constraints, and current state.
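The chair example can be sketched as a tiny rule-based decision function. This is only an illustration under invented assumptions: the `RobotState` fields, the thresholds, and the action names are all hypothetical, and real control systems typically rely on planners and continuous controllers rather than a handful of if-statements.

```python
from dataclasses import dataclass

# Hypothetical constants chosen for illustration only.
ROBOT_WIDTH_M = 0.5      # how much free space the robot needs to pass
MIN_CONFIDENCE = 0.6     # below this, the perception result is not trusted
MIN_BATTERY = 0.2        # pushing an obstacle costs energy


@dataclass
class RobotState:
    obstacle_ahead: bool        # did perception report an obstacle?
    obstacle_confidence: float  # how sure perception is, from 0 to 1
    side_clearance_m: float     # free space beside the obstacle
    obstacle_movable: bool      # can the robot push it aside?
    battery_fraction: float     # remaining battery, from 0 to 1


def choose_action(state: RobotState) -> str:
    """Pick an action from sensor-derived data plus the robot's own constraints."""
    if not state.obstacle_ahead:
        return "continue"
    if state.obstacle_confidence < MIN_CONFIDENCE:
        return "stop"  # unreliable data: play it safe rather than guess
    if state.side_clearance_m > ROBOT_WIDTH_M:
        return "go_around"
    if state.obstacle_movable and state.battery_fraction > MIN_BATTERY:
        return "move_obstacle"
    return "stop"


chair_in_path = RobotState(
    obstacle_ahead=True,
    obstacle_confidence=0.9,
    side_clearance_m=0.3,
    obstacle_movable=True,
    battery_fraction=0.8,
)
print(choose_action(chair_in_path))
```

Even in this toy version, the decision depends on more than what the sensors saw. The robot's width, its battery level, and how much it trusts its own perception all shape the outcome.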

In other words, the robot’s brain isn’t just about perception. It’s also about action. And this is where things get really tricky. The robot needs to be able to make decisions in real time, often with incomplete or unreliable data. It needs to be able to adapt to changing conditions, anticipate future events, and adjust its behavior accordingly. And all of this needs to happen in a matter of milliseconds.

Why True Autonomy Is So Hard

By now, you’re probably starting to see why true autonomy is so difficult to achieve. It’s not just about having the right sensors or even the right algorithms. It’s about having a brain that can process massive amounts of data in real time, make sense of that data, and then turn it into decisions the robot can act on, all while dealing with the messy, unpredictable nature of the real world.

And that’s not even the hardest part. The real challenge is creating a system that can learn and adapt over time. Just like a human driver gets better with experience, a robot needs to be able to learn from its mistakes and improve its performance. This requires advanced machine learning techniques, as well as a deep understanding of how the robot’s control systems interact with its environment.

In short, true autonomy is about much more than just sensors. It’s about creating a brain that can think, learn, and adapt in real time. And while we’re getting closer to achieving this, there’s still a long way to go.

The Future of Robot Brains

So, what does the future hold for robot brains? Well, we’re already seeing some exciting developments in the field of artificial intelligence and machine learning. These technologies are allowing robots to become more autonomous, more adaptable, and more intelligent. But there’s still a lot of work to be done.

One of the biggest challenges is creating systems that can operate in the real world, with all its unpredictability and complexity. This requires not only better sensors but also more advanced control systems, algorithms, and machine learning models. It’s a tough problem, but one that researchers and engineers are actively working on.

In the meantime, don’t be fooled into thinking that sensors alone are the key to robotic intelligence. They’re just one piece of the puzzle. The real magic happens in the robot’s brain.

And that’s where the future of robotics lies.